Federal AI Action Plan RFI - AI Analysis of Responses from Members of the General Public

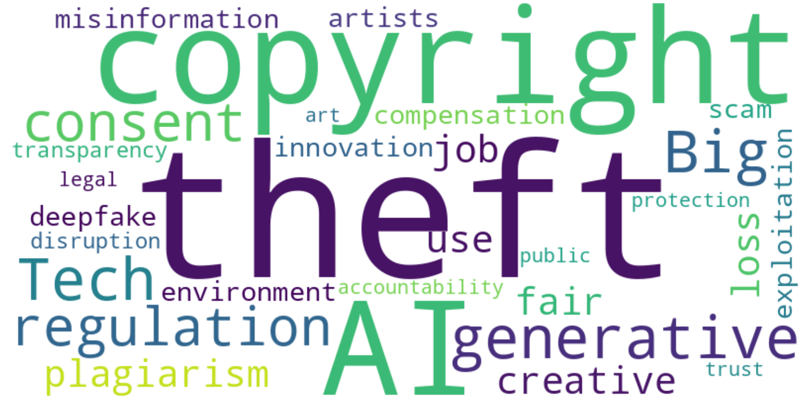

A deeper dive with a more detailed analysis for this category of respondents, complete with a sentiment analysis, word cloud, list of topics common across many responses, recurring themes among recommendations to the government, and any notable observations.

4/27/2025 · 4 min read

The vast majority of responses to the federal AI Action Plan RFI from individual members of the general public reflect deep skepticism and concern about the current direction of AI development—especially generative AI. A significant portion of the responses highlight concerns over big tech's role in exploiting creative works without proper compensation or consent, fueling a call for stricter regulation and stronger legal protections for intellectual property. Respondents emphasize the need for transparency in how AI models are trained and the data they use, often expressing distrust in the motivations of AI companies and their potential harm to the creative industries.

Despite the strong opposition, there are small pockets of optimism, particularly in the realms of medical and scientific research, where AI is seen as having beneficial applications, albeit still requiring careful regulation. However, the consensus remains that AI's rapid development without sufficient safeguards poses a significant risk to creators' rights, job security, and societal values. The public is calling for clear policies that enforce transparency, accountability, and fair compensation to ensure that AI technologies benefit society equitably.

The sentiment across the batches of responses was generally negative towards the current trajectory of AI development, particularly regarding generative AI’s impact on intellectual property, economic stability, and creator rights. Here's a breakdown of key sentiment categories:

Negative Sentiment (~80-85%): Criticism centers on AI companies “stealing” from creators, exploiting work without compensation, and posing economic threats. There were widespread calls for legal protections, transparency, and accountability from AI companies. Many responses use strong language, including terms like “theft,” “scam,” and “plagiarism.”

Neutral/Analytical (~10-15%): These responses are often focused on offering policy suggestions or explaining AI's potential, but with caution and skepticism.

Positive Sentiment (~5%): A small portion of responses view AI positively, particularly in the context of medical, scientific, or educational advancements. However, even these responses call for careful regulation.

Below are the most consistently mentioned issues and themes, reflecting what individuals care most about in the debate over AI’s role in society. The following five topics were mentioned most frequently across all responses. Because a single response often raised several topics, the percentages overlap and do not sum to 100%.

Copyright Infringement & Intellectual Property (IP) (~70-75% of responses): Respondents overwhelmingly voiced concerns about AI companies using copyrighted work without consent. There were repeated calls for stricter copyright laws and the enforcement of creators’ rights. Phrases like “AI is theft,” “stealing intellectual property,” and “plagiarism” were common.

Economic Impact & Job Loss (~50-55% of responses): Many responses expressed fears of AI replacing jobs, particularly in creative fields. There was a recurring theme of AI threatening small businesses, artists, and the creative workforce at large. Calls for government intervention to protect workers were prevalent.

Regulation & Legal Frameworks (~40-45% of responses): Respondents demanded stronger regulation of AI, with specific focus on transparency, accountability, and legal protections for creators. Many suggested creating laws that require consent for data usage and enforce fair compensation.

Big Tech Distrust (~30-35% of responses): There was a strong sentiment of distrust toward large AI companies, with accusations of exploitation and disregard for public interests. The term “Big Tech” was often used to describe companies like OpenAI and others involved in generative AI.

Ethical and Moral Concerns (~25-30% of responses): Respondents frequently described AI as “immoral” or “unethical,” citing its potential to dehumanize, spread misinformation, and undermine human creativity. Ethical concerns about the use of data and privacy also appeared here.

Other topics were mentioned less frequently but were nevertheless prominent. Misinformation and deepfakes (~15-20%) drew fears that AI could be used to create deepfakes, misinformation, and fake news that disrupt society and politics. The environmental impact of AI (~5-10%) also came up, mainly as concern about AI’s footprint in terms of energy usage and data centers.

Individuals frequently provided concrete suggestions and demands for policy action. There were several key suggestions or calls to action repeated across large numbers of responses:

Copyright Protection & Creator Consent: Many responses advocated for stronger copyright laws, with no exemptions for AI companies, and called for creators to be compensated when their work is used to train AI models.

Transparency in AI Development: Respondents made two main recommendations. The first is disclosure of training data: AI companies should be transparent about the data they use to train their models. The second is labeling of AI-generated content: a significant portion of respondents want content generated by AI to be clearly labeled to avoid misleading the public.

Regulation & Oversight: Recommendations included stronger government regulation to enforce copyright laws, prevent exploitation, and ensure AI development serves the public good. Some respondents also called for bans on specific AI models or practices, especially those that enable deepfakes or plagiarism.

Support for Creatives & Job Protection: Many responses suggested job protection policies, retraining programs, and other forms of support for workers displaced by AI.

A number of unique patterns and noteworthy trends emerged from the analysis, offering a more nuanced view of public sentiment and advocacy. Many responses used dramatic language, likening AI to a “disaster,” “scam,” or “atomic bomb.” These expressions were often used to highlight the perceived urgency of addressing AI's impact. A number of responses from artists, writers, and small business owners spoke about how AI's rise directly impacts their livelihoods, often stating that they feel like they are being “erased” by AI systems. Some responses were organized or reflected common talking points that seem to be circulating in advocacy or activist communities concerned about AI's growing role.

To provide some balance to the prevailing tone of most responses, a smaller percentage of responses acknowledged that AI could bring benefits, especially in scientific research, education, or medicine. However, even these responses expressed the need for strict ethical oversight and regulation to prevent harm to society.


© 2025. All rights reserved.
